Part 1: Apache Kafka basics

Created on: Sep 26, 2024

Apache Kafka is a distributed event streaming platform. It's essentially a high-performance system for ingesting, processing, and storing streams of records.

Main features of Apache Kafka

- Distributed: It runs on a cluster of servers, providing high availability and scalability.

- Persistent: Records are stored durably, allowing for later processing or analysis.

- High throughput: It can handle millions of messages per second.

- Low latency: Messages are delivered to consumers quickly.

- Reliable: Kafka provides at-least-once delivery by default and can provide exactly-once semantics through idempotent producers and transactions.

Some common use cases

- Message queuing: Asynchronous communication between systems

- Data integration: Integrating data from various sources.

- Real-time stream processing: Processing and analyzing data streams in real-time.

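Let's understand this with a hands-on example. Create a Docker Compose file (for example docker-compose.yml) with the content below and start it with docker compose up -d. Note that Kafka 4.0 removed ZooKeeper mode, so if the brokers fail to start with the latest tag, pin both images to a 7.x tag.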

    version: "2"
    services:
      zookeeper:
        image: confluentinc/cp-zookeeper:latest
        hostname: zookeeper
        container_name: zookeeper
        ports:
          - "2181:2181"
        environment:
          ZOOKEEPER_CLIENT_PORT: 2181
          ZOOKEEPER_TICK_TIME: 2000
      kafka1:
        image: confluentinc/cp-kafka:latest
        hostname: kafka1
        container_name: kafka1
        depends_on:
          - zookeeper
        ports:
          - "9092:9092"
          - "29092:29092"
        environment:
          KAFKA_BROKER_ID: 1
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://localhost:9092,PLAINTEXT_INTERNAL://kafka1:29092
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_INTERNAL:PLAINTEXT
          KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT_INTERNAL
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
          KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 3
          KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 2
      kafka2:
        image: confluentinc/cp-kafka:latest
        hostname: kafka2
        container_name: kafka2
        depends_on:
          - zookeeper
        ports:
          - "9093:9093"
          - "29093:29093"
        environment:
          KAFKA_BROKER_ID: 2
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://localhost:9093,PLAINTEXT_INTERNAL://kafka2:29093
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_INTERNAL:PLAINTEXT
          KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT_INTERNAL
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
          KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 3
          KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 2
      kafka3:
        image: confluentinc/cp-kafka:latest
        hostname: kafka3
        container_name: kafka3
        depends_on:
          - zookeeper
        ports:
          - "9094:9094"
          - "29094:29094"
        environment:
          KAFKA_BROKER_ID: 3
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
          KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://localhost:9094,PLAINTEXT_INTERNAL://kafka3:29094
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_INTERNAL:PLAINTEXT
          KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT_INTERNAL
          KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 3
          KAFKA_TRANSACTION_STATE_LOG_REPLICATION_FACTOR: 3
          KAFKA_TRANSACTION_STATE_LOG_MIN_ISR: 2

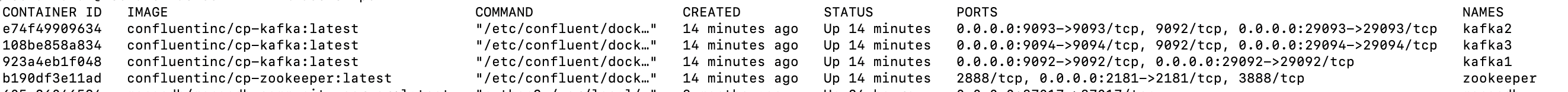

Your container will come something line below

Architecture

Kafka has four major components.

- Broker

- Zookeeper

- Producer

- Consumer

Broker

A Kafka broker is a server that stores and manages messages.

- A Kafka broker operates as part of a Kafka cluster, which can consist of one or more brokers.

- Each broker has its own ID.

- Each broker stores the partitions assigned to it and serves the produce and fetch requests for them.

Zookeeper

ZooKeeper provides a centralized infrastructure for maintaining configuration information, naming, distributed synchronization, and group services.

ZooKeeper has been marked as deprecated since Apache Kafka 3.5. According to the Kafka documentation, KRaft replaces ZooKeeper, and ZooKeeper mode was removed entirely in Kafka 4.0.

Producer

A producer in Kafka is an application or service that sends data to the Kafka cluster. It's responsible for producing records (messages) and publishing them to specific topics within the cluster.

- Record Serialization: Producers often serialize records into a specific format (e.g., JSON, Avro) for efficient storage and transmission.

- Partitioning: Producers may use various partitioning strategies to distribute records across partitions within a topic.

- Error Handling: Producers implement mechanisms to handle errors that may occur during record production, such as network failures or connection timeouts.
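The serialization and partitioning steps above can be sketched in a few lines of Python. This is only an illustration, not how a real Kafka client is implemented: the function names are hypothetical, and zlib.crc32 stands in for the murmur2 hash that Kafka's Java client uses for keyed records.

```python
import json
import zlib


def serialize(record: dict) -> bytes:
    """Serialize a record to compact JSON bytes before sending it."""
    return json.dumps(record, separators=(",", ":"), sort_keys=True).encode("utf-8")


def choose_partition(key: str, num_partitions: int) -> int:
    """Map a key to a partition so that equal keys always share a partition.

    Assumption: crc32 is a stand-in hash, not Kafka's actual partitioner.
    """
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    return zlib.crc32(key.encode("utf-8")) % num_partitions


if __name__ == "__main__":
    # Orders from the same customer land on the same partition of a 3-partition topic.
    for order_id, customer in [(1, "alice"), (2, "bob"), (3, "alice")]:
        payload = serialize({"order_id": order_id, "customer": customer})
        print(customer, choose_partition(customer, 3), payload)
```

Because the partition depends only on the key, all records for one customer stay in one partition and therefore keep their relative order.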

Let's create a topic named order and produce some data.

    docker exec -it kafka1 /bin/bash

    # Create a topic named order
    kafka-topics --create --topic order --partitions 3 --replication-factor 2 --if-not-exists --bootstrap-server localhost:9092

    # Send some messages to the order topic and check them in the consumer console
    kafka-console-producer --bootstrap-server localhost:29092 --topic order

Consumer

A consumer in Kafka is an application or service that receives data from the Kafka cluster.

- Topic Subscription: Consumers subscribe to one or more topics, indicating their interest in receiving records published to those topics.

- Offset Management: Consumers keep track of the offset (position) of the last record they have consumed from each partition of a topic. This allows them to resume consumption from the correct position in case of failures or restarts.

- Error Handling: Consumers implement mechanisms to handle errors that may occur during record consumption, such as network failures or connection timeouts.

    # Consume the order topic from the beginning
    kafka-console-consumer --bootstrap-server localhost:9092 --topic order --from-beginning

Consumer groups

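Kafka allows consumers to be organized into groups. Consumers within the same group share the workload of a topic: each partition is assigned to exactly one consumer in the group, so each record is delivered to only one member of the group. This provides scalability and fault tolerance, because when a consumer leaves or fails, its partitions are reassigned to the remaining members.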
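To make the sharing of partitions within a group concrete, here is a minimal sketch of a round-robin assignment. The function name and the consumer names are hypothetical, and real Kafka assignors (range, round-robin, sticky, cooperative) are more involved than this.

```python
def assign_partitions(partitions: list, consumers: list) -> dict:
    """Spread partitions over the consumers of one group, round-robin.

    Each partition goes to exactly one consumer; a consumer may own several
    partitions, or none if the group has more members than partitions.
    """
    if not consumers:
        raise ValueError("a group needs at least one consumer")
    members = sorted(consumers)
    assignment = {member: [] for member in members}
    for index, partition in enumerate(sorted(partitions)):
        assignment[members[index % len(members)]].append(partition)
    return assignment


if __name__ == "__main__":
    print(assign_partitions([0, 1, 2], ["c1", "c2"]))  # {'c1': [0, 2], 'c2': [1]}
    print(assign_partitions([0, 1, 2], ["c1"]))        # {'c1': [0, 1, 2]}
```

The second call mimics a rebalance after c2 has left the group: the remaining consumer takes over all partitions.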

Topic

A topic in Kafka is a logical grouping of related messages. It's like a channel through which producers send records and consumers receive them.

- Partitioning: Topics are divided into partitions, which are distributed across the Kafka cluster.

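- Ordering: Records within a partition are guaranteed to be ordered; there is no ordering guarantee across partitions.

    kafka-topics --create --topic order --partitions 4 --replication-factor 3 --if-not-exists --bootstrap-server localhost:9092

The above command creates a topic named order with 4 partitions and a replication factor of 3. Because each replica of a partition must live on a different broker, the cluster needs at least 3 brokers. Because of --if-not-exists, the command does nothing if the order topic already exists.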

Offset Management

Offset management is the mechanism that lets a consumer resume where it left off, so that records are not skipped and are reprocessed as little as possible. An offset is a record's position within a partition; the committed offset of a consumer group points to the next record the group should read from that partition.

- Storage: Kafka stores the committed offsets of each consumer group in the internal topic __consumer_offsets (only very old clients stored them in ZooKeeper).

- Offset Commit: Consumers periodically commit their offsets to Kafka. If a consumer fails, it or another member of its group resumes processing from the last committed offset.

- Commit strategies: Consumers can commit offsets automatically (auto commit) or manually, and a manual commit can be synchronous or asynchronous.
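The effect of committing after processing can be simulated without a broker. The sketch below is an in-memory stand-in, not a Kafka client: PartitionLog, consume, and the crash_at hook are hypothetical names used only for illustration.

```python
class PartitionLog:
    """In-memory stand-in for one partition plus one group's committed offset."""

    def __init__(self, records):
        self.records = list(records)
        self.committed = 0  # offset of the next record the group should read

    def poll(self, max_records=2):
        """Return (offset, record) pairs starting at the committed offset."""
        start = self.committed
        batch = self.records[start:start + max_records]
        return list(enumerate(batch, start=start))

    def commit(self, next_offset):
        self.committed = next_offset


def consume(log, handler, crash_at=None):
    """Process batches and commit manually after each fully processed batch.

    crash_at is a hypothetical hook that simulates a failure right after the
    record at that offset was handled but before its batch was committed.
    """
    while True:
        batch = log.poll()
        if not batch:
            return
        for offset, record in batch:
            handler(record)
            if offset == crash_at:
                raise RuntimeError("simulated crash before commit")
        log.commit(batch[-1][0] + 1)


if __name__ == "__main__":
    log = PartitionLog(["a", "b", "c", "d", "e"])
    seen = []
    try:
        consume(log, seen.append, crash_at=2)
    except RuntimeError:
        pass  # the "restarted" consumer below resumes from the committed offset
    consume(log, seen.append)
    print(seen)  # ['a', 'b', 'c', 'c', 'd', 'e']
```

Record c is handled twice because the crash happened before its batch was committed. This is what at-least-once delivery looks like in practice, and it is why handlers should be idempotent when duplicates matter.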

Partition

In Apache Kafka, a partition is a way to divide a topic into multiple parts, which are then stored across different brokers in a cluster.

- Scalability: Kafka can scale horizontally because partitions can be spread across multiple nodes in a cluster.

- Parallel processing: Multiple consumers and producers can work on a topic simultaneously because the data is split across multiple partitions.

- Ordering: Messages within a partition are stored and consumed in the order they were produced, which helps maintain data consistency.

- Fault tolerance: Each partition can have multiple replicas across different brokers, which helps ensure data redundancy.

Every partition has a leader broker, which receives the messages and replicates them to the follower brokers. If the leader fails, a new leader is elected from the in-sync followers to ensure continuous availability and data consistency.
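The failover rule can be sketched as a small function. This is a simplified model under stated assumptions, not Kafka's controller code: elect_leader is a hypothetical name, and the rule shown (the first assigned replica that is alive and in sync wins) ignores options such as unclean leader election.

```python
from typing import List, Optional, Set, Tuple


def elect_leader(replicas: List[int], isr: List[int], failed: Set[int]) -> Tuple[Optional[int], List[int]]:
    """Return (new_leader, surviving_isr) for one partition.

    replicas: broker ids in assignment order
    isr:      broker ids currently in sync with the leader
    failed:   broker ids that are down
    """
    live_isr = [broker for broker in isr if broker not in failed]
    for broker in replicas:
        if broker in live_isr:
            return broker, live_isr
    return None, live_isr  # no in-sync replica left: the partition is offline


if __name__ == "__main__":
    # Broker 1 led the partition and then failed; broker 2 takes over.
    print(elect_leader([1, 2, 3], [1, 2, 3], {1}))  # (2, [2, 3])
```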

Misc commands

    # Show information about a Kafka topic (here: order)
    kafka-topics --describe --topic <topic-name> --bootstrap-server <broker-host:port>
    kafka-topics --describe --topic order --bootstrap-server localhost:9092

    Topic: order  TopicId: gsY_XQrXS56KQIk-aH4ggw  PartitionCount: 1  ReplicationFactor: 2  Configs:
        Topic: order  Partition: 0  Leader: 1  Replicas: 1,2  Isr: 1,2
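If you want to check this output from a script, for example to spot replicas that have fallen out of sync, a partition line can be parsed with a small helper. This is a sketch that assumes the field layout shown above; parse_partition_line and out_of_sync are hypothetical names, and other Kafka versions may print additional fields.

```python
import re


def parse_partition_line(line: str) -> dict:
    """Parse one per-partition line of the describe output into a dict."""
    fields = dict(re.findall(r"(\w+):\s*(\S+)", line))
    return {
        "topic": fields["Topic"],
        "partition": int(fields["Partition"]),
        "leader": int(fields["Leader"]),
        "replicas": [int(b) for b in fields["Replicas"].split(",")],
        "isr": [int(b) for b in fields["Isr"].split(",")],
    }


def out_of_sync(info: dict) -> list:
    """Return the replicas that are assigned but not currently in sync."""
    return sorted(set(info["replicas"]) - set(info["isr"]))


if __name__ == "__main__":
    info = parse_partition_line("Topic: order Partition: 0 Leader: 1 Replicas: 1,2 Isr: 1,2")
    print(info["leader"], info["replicas"], out_of_sync(info))  # 1 [1, 2] []
```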

    # Check the Kafka version
    kafka-topics --version

    # List all topics in the cluster
    kafka-topics --list --bootstrap-server localhost:9092

    # Delete a topic
    kafka-topics --delete --topic order --bootstrap-server localhost:29092

    # Increase the number of partitions (the count can only grow, never shrink)
    kafka-topics --bootstrap-server localhost:29092 --alter --topic order --partitions 4

Zookeeper commands

    # Connect to the ZooKeeper container
    docker exec -it zookeeper /bin/bash

    # Open the ZooKeeper shell
    zookeeper-shell localhost:2181

    # List all registered brokers
    ls /brokers/ids
    # [1, 2, 3]

    # Inspect a particular broker
    get /brokers/ids/1